
CMP Dataset (Version 3.1)

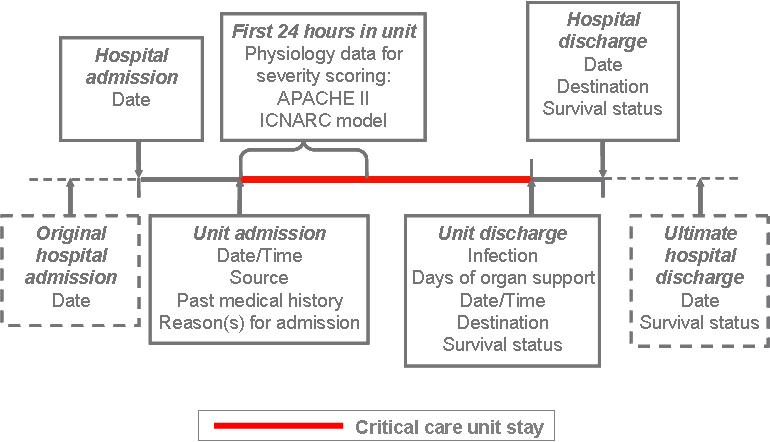

An overview of the Case Mix Programme (CMP) Dataset (Version 3.1) is provided below:

CMP data processing

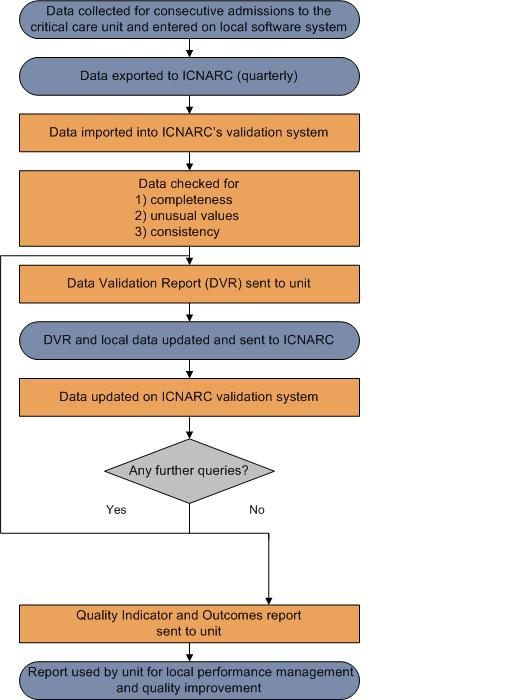

An overview of the Case Mix Programme (CMP) process for the collection, entry, submission, validation, analysis and reporting of CMP data is shown in the diagram below:

Presentation of results

Funnel plots

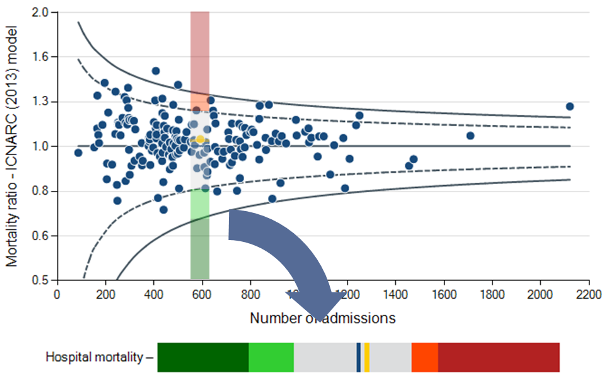

Potential quality indicators are presented in a funnel plot format.

The grey lines are control limits: the dashed line marks two standard deviations (2SD) and the solid line marks three standard deviations (3SD). Selected units are highlighted in orange.

As the number of admissions increases, a result can be estimated more precisely, so the control limits narrow and form a funnel shape.

If variation between results is purely random, then on average approximately 95% of points should lie within the inner (2SD) control limits and approximately 99.8% should lie within the outer (3SD) control limits.
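The following minimal Python sketch shows why the limits form a funnel. It computes normal-approximation control limits for a proportion-type indicator, assuming a comparator proportion `p0` and a binomial standard error. The names `funnel_limits` and `coverage` are hypothetical, and the exact formula used for the CMP funnel plots may differ. The sketch also shows that exact 2SD and 3SD limits cover about 95.4% and 99.7% of a normal distribution, which is why the percentages above are approximate.

```python
import math

def funnel_limits(p0, n, z, phi=1.0):
    """Lower/upper control limits for a proportion at sample size n (hypothetical helper).

    p0  : comparator (overall) proportion, e.g. 0.20
    n   : number of admissions for the unit
    z   : number of standard deviations (2 for inner, 3 for outer limits)
    phi : multiplicative over-dispersion factor (1.0 = no adjustment)
    """
    se = math.sqrt(phi * p0 * (1 - p0) / n)
    # Clip to [0, 1]: a proportion cannot fall outside this range.
    return max(0.0, p0 - z * se), min(1.0, p0 + z * se)

def coverage(z):
    """Two-sided probability that a standard normal value lies within +/- z."""
    return math.erf(z / math.sqrt(2))

# The limits narrow as the number of admissions grows.
for n in (50, 200, 1000):
    lo2, hi2 = funnel_limits(0.20, n, z=2)
    lo3, hi3 = funnel_limits(0.20, n, z=3)
    print(f"n={n:5d}  2SD: {lo2:.3f}-{hi2:.3f}  3SD: {lo3:.3f}-{hi3:.3f}")

print(round(coverage(2), 4), round(coverage(3), 4))  # 0.9545 0.9973
```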

Example – Hospital mortality

If the model predicted hospital mortality perfectly, the points would form a funnel shape centred on a mortality ratio of one (1.0). The mortality ratio is the observed number of deaths divided by the number expected from the model.
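Below is a minimal sketch of the mortality ratio and approximate control limits around 1.0. It assumes that each admission's death is an independent Bernoulli event with the model-predicted risk. The function names are hypothetical. The binomial variance is an illustrative choice, because the document does not state the exact variance formula used.

```python
import math

def mortality_ratio(deaths, predicted_risks):
    """Observed deaths divided by expected deaths (hypothetical helper).

    deaths          : 0/1 outcomes (1 = died in acute hospital)
    predicted_risks : model-predicted probabilities of death, same order
    """
    observed = sum(deaths)
    expected = sum(predicted_risks)  # expected deaths = sum of predicted risks
    return observed / expected

def ratio_limits(predicted_risks, z, phi=1.0):
    """Approximate control limits around a target ratio of 1.0.

    Assumes independent Bernoulli outcomes, so Var(O) = sum p(1 - p)
    and SE(O / E) = sqrt(phi * Var(O)) / E.
    """
    expected = sum(predicted_risks)
    var_observed = sum(p * (1 - p) for p in predicted_risks)
    se = math.sqrt(phi * var_observed) / expected
    return max(0.0, 1.0 - z * se), 1.0 + z * se

# Usage: five admissions, two deaths, 1.5 deaths expected.
risks = [0.1, 0.3, 0.5, 0.2, 0.4]
deaths = [0, 1, 1, 0, 0]
print(round(mortality_ratio(deaths, risks), 2))  # 1.33
print(ratio_limits(risks, z=2))
```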

Delayed discharges - 12 and 24 hours

Please note that the potential quality indicators relating to delayed discharges are not displayed as funnel plots. For these indicators, the dashed line marks the cut-off for the 5% of critical care units with the highest rates.
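As a hedged illustration, the cut-off for the 5% of units with the highest rates can be computed as the 95th percentile of unit-level rates. This sketch uses Python's `statistics.quantiles` with the inclusive method. ICNARC does not state which percentile definition it used, so that choice and the function name `top5_threshold` are assumptions.

```python
import statistics

def top5_threshold(unit_rates):
    """Rate above which roughly the top 5% of units lie (hypothetical helper).

    n=20 returns 19 cut points (5%, 10%, ..., 95%); the last is the 95th
    percentile. method="inclusive" is an assumed percentile definition.
    """
    return statistics.quantiles(unit_rates, n=20, method="inclusive")[-1]

# Usage: flag units whose delayed-discharge rate exceeds the cut-off.
rates = [r / 100 for r in range(1, 101)]
cutoff = top5_threshold(rates)
flagged = [r for r in rates if r > cutoff]
print(round(cutoff, 4), len(flagged))
```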

CMP Quality Indicator Dashboard

The Case Mix Programme (CMP) Quality Indicator Dashboard summarises the results of each quality indicator for a single NHS adult, general critical care unit. Note that the dashboard can only be viewed when a specific unit is selected from the drop-down menus at the top of the page; for all other selections (hospital, trust, network or SHA), nothing is shown.

Each row of the dashboard can be viewed as a 'slice' through the corresponding funnel plot (see Funnel plots above). It shows the unit's own value for that potential quality indicator relative to the comparator value and the two and three standard deviation lines.

For Hospital mortality, the comparator value is one (1.0), corresponding to observed acute hospital mortality equal to that expected from the ICNARC (2013) model.

For Unit-acquired infection in blood, Non-clinical transfers (out), Out-of-hours discharges to the ward, Daytime discharges to the ward, Delayed discharges (24 hour delay) and Unplanned readmissions within 48 hours, the agreed threshold value is based on the median across all participating CMP units in 2012/13, as published by the Clinical Reference Group (CRG) for specialised commissioning.

For Unit-acquired MRSA, Non-clinical transfers (out), Out-of-hours discharges to the ward (not delayed), Delayed discharges (12 hour delay) and Unplanned readmissions within 48 hours, the comparator value is the mid line (i.e. the overall percentage in all critical care units in the CMP for the time period of the report).

If the unit's value (orange line) falls within the light green or dark green regions of the dashboard, this corresponds to a value below the lower two standard deviation or three standard deviation lines of the funnel plot, respectively. If the unit's value falls within the dark orange or red regions, this corresponds to a value above the upper two standard deviation or three standard deviation lines, respectively.
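The mapping from funnel-plot limits to dashboard colours described above can be sketched as a simple lookup. The function name and the label for values inside the 2SD limits are assumptions for illustration. So is the treatment of values exactly on a limit, which are counted as inside.

```python
def dashboard_region(value, lower3, lower2, upper2, upper3):
    """Map a unit's value onto the dashboard colour bands (hypothetical helper).

    The four limits are the funnel-plot control limits at the unit's sample
    size. Values exactly on a limit are treated as inside it (an assumption).
    """
    if value < lower3:
        return "dark green"      # below the lower 3SD line
    if value < lower2:
        return "light green"     # below the lower 2SD line
    if value > upper3:
        return "red"             # above the upper 3SD line
    if value > upper2:
        return "dark orange"     # above the upper 2SD line
    return "within 2SD"          # hypothetical label for the central band

# Usage with limits for a comparator of 20% and 100 admissions.
print(dashboard_region(0.30, lower3=0.08, lower2=0.12, upper2=0.28, upper3=0.32))
```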

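Note that in some cases (particularly for small sample sizes, or where an indicator varies widely across critical care units), the green and/or orange/red regions may not be present. This typically happens when the control limits are so wide that they fall outside the range of possible values (for example, a lower limit below 0%).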

Clinical Reference Group (CRG)

The Clinical Reference Group (CRG) for adult critical care is responsible for specialised commissioning for critical care services.

ICNARC is working in conjunction with the CRG for Adult Critical Care to support specialised commissioning by providing analyses of data from participating CMP units in a publicly available format.

This information forms part of the Annual Quality Report as a ‘dashboard’ for each critical care unit, indicating whether or not the unit meets the agreed threshold for each indicator.

Please note that some of the indicators provided in previous Annual Quality Reports have been amended or replaced by indicators prescribed by the CRG for adult critical care. Where relevant, we have also created new indicators not currently prescribed by the CRG, and retained our own CMP-defined indicators.

For more information on the Clinical Reference Group for adult critical care, please follow this link: https://www.england.nhs.uk/commissioning/spec-services/npc-crg/group-d/d16/

ICNARC risk prediction model

ICNARC uses the ICNARC (2013) risk prediction model to calculate the risk of acute hospital mortality.

The ICNARC model was developed using data from over 200,000 admissions in the CMP Database (Harrison et al, 2007). Regular recalibration ensures that each NHS adult, general (ICU, ICU/HDU) critical care unit is being compared with current CMP data. The ICNARC (2013) model is the latest recalibration.

Please note: data in this report may differ from those reported for the same period in participating NHS adult, general critical care units’ comparative reports (Data Analysis Reports).

For more information on the ICNARC risk prediction model including inclusion and exclusion criteria, please follow the link below:

ICNARC risk prediction model

Managing outliers

An outlier is a result that is statistically significantly further from the expected comparator value than would usually occur by chance alone.

ICNARC have developed guidance to ensure that potential outliers are identified through the processes of national clinical audit. The guidance document sets out the actions that ICNARC takes when data indicate that results for a site significantly deviate from the expected value.

ICNARC's guidance on the detection and management of outliers is based on Department of Health recommendations on the 'Detection and management of outliers' and supersedes ICNARC’s previous policy.

For more information on the detection and management of outliers, please follow the link below:

Detection and management of outliers - guidance

Statistical appendix

Model fit

The ICNARC model was most recently recalibrated in July 2013 using data from 242,450 admissions to 207 NHS adult, general (ICU, ICU/HDU) critical care units from January 2011 to December 2012.

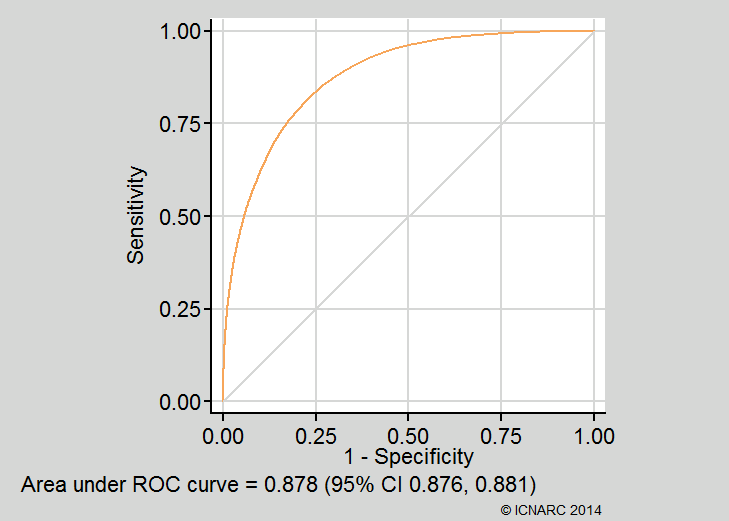

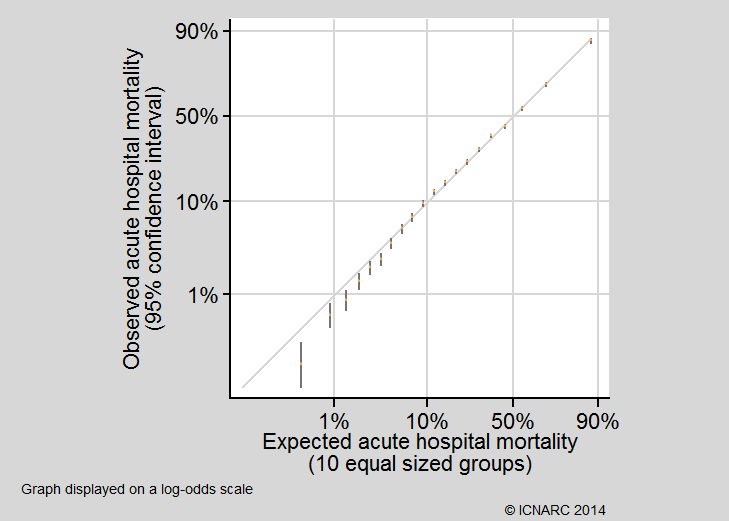

For the purpose of the Case Mix Programme (CMP) Annual Quality Report 2013/14, the fit of the model was assessed in 133,663 admissions to 201 NHS adult, general critical care units, using the following methods:

- Discrimination was assessed using the c index [1], equivalent to the area under the receiver operating characteristic (ROC) curve [2];

- Calibration was assessed graphically by dividing the dataset into 20 equal-sized groups based on quantiles of predicted risk. Note that the Hosmer-Lemeshow test for perfect calibration was not used. In a sample of this size, statistically significant departures from perfect calibration would be expected even with a well-fitting model [3];

- Overall goodness of fit was assessed with Brier’s score [4], representing the mean squared error between outcomes and predictions, and with Shapiro’s R [5], representing the geometric mean probability assigned to the event that occurred.
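The three measures of fit listed above can be computed directly from 0/1 outcomes and predicted risks. This is a minimal, unoptimised Python sketch with hypothetical function names. The pairwise c index loop is quadratic in sample size and is for illustration only; large datasets would use a rank-based formula.

```python
import math

def c_index(outcomes, risks):
    """Probability that a random death had a higher predicted risk than a random survivor.

    Tied predicted risks count as 0.5 (equivalent to the area under the ROC curve).
    """
    deaths = [r for y, r in zip(outcomes, risks) if y == 1]
    survivors = [r for y, r in zip(outcomes, risks) if y == 0]
    score = 0.0
    for d in deaths:
        for s in survivors:
            if d > s:
                score += 1.0
            elif d == s:
                score += 0.5
    return score / (len(deaths) * len(survivors))

def brier_score(outcomes, risks):
    """Mean squared error between 0/1 outcomes and predicted probabilities."""
    return sum((y - p) ** 2 for y, p in zip(outcomes, risks)) / len(outcomes)

def shapiro_r(outcomes, risks):
    """Geometric mean of the probability assigned to the event that occurred."""
    log_sum = sum(math.log(p if y == 1 else 1 - p) for y, p in zip(outcomes, risks))
    return math.exp(log_sum / len(outcomes))

print(c_index([1, 0, 1, 0], [0.4, 0.4, 0.6, 0.7]))  # 0.375
```

A higher c index and Shapiro's R, and a lower Brier's score, indicate better fit.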

The c index (area under the ROC curve) was 0.878 (95% confidence interval 0.876 to 0.881). Calibration was qualitatively very good. Brier’s score was 0.107 and Shapiro’s R was 0.713. These compare favourably with a c index, Brier’s score and Shapiro’s R of 0.870, 0.132 and 0.665 from the original development data [6] and 0.868, 0.126 and 0.673 from previous external validation [7]: the c index and Shapiro’s R are higher and Brier’s score is lower. The ROC curve and calibration plot are shown above.
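The graphical calibration check uses 20 equal-sized groups by predicted risk. It can be sketched as follows: each group's mean predicted risk is compared with its observed proportion of deaths, and a well-calibrated model gives points close to the line of equality. ICNARC does not state how it split groups of tied risks. This sketch simply sorts by risk and cuts by position, and the function name is hypothetical.

```python
def calibration_table(outcomes, risks, groups=20):
    """(mean predicted risk, observed proportion) for equal-sized risk groups.

    Admissions are sorted by predicted risk and cut by position, so group
    sizes differ by at most one when n is not divisible by `groups`.
    """
    order = sorted(range(len(risks)), key=lambda i: risks[i])
    n = len(order)
    table = []
    for g in range(groups):
        idx = order[g * n // groups:(g + 1) * n // groups]
        if not idx:
            continue  # more groups than admissions
        mean_pred = sum(risks[i] for i in idx) / len(idx)
        observed = sum(outcomes[i] for i in idx) / len(idx)
        table.append((mean_pred, observed))
    return table

# Usage: plot observed against mean predicted; points near y = x indicate good calibration.
for pred, obs in calibration_table([0, 0, 1, 1], [0.1, 0.2, 0.7, 0.9], groups=2):
    print(f"predicted {pred:.2f}  observed {obs:.2f}")
```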

Calculation of funnel plots

Funnel plots were calculated using the methods of Spiegelhalter (2005) [8]. Over-dispersion was adjusted for by estimating a multiplicative over-dispersion factor for each potential quality indicator based on 10% Winsorisation [8, 9]. The estimated over-dispersion factors were:

- Hospital mortality: 1.2
- Hospital mortality - risk greater than or equal to 20%: 1.2
- Hospital mortality - risk less than 20%: 1.0
- Unit-acquired MRSA: 1.2
- Unit-acquired infection in blood: 1.7
- Non-clinical transfers (out): 1.8
- Out-of-hours discharges to the ward: 3.0
- Daytime discharges to the ward: 3.0
- Out-of-hours discharges to the ward (not delayed): 2.2
- Unplanned readmissions within 48 hours: 1.0
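The following is a minimal sketch of the Winsorised over-dispersion estimate for a proportion-type indicator, following the approach cited above. It proceeds in three steps:

1. Compute each unit's z-score against the comparator.
2. Pull the most extreme 10% at each tail in to the remaining extreme values.
3. Average the squared scores.

The function name, the per-tail reading of "10%", the simple order-statistic percentiles and the floor at 1.0 are assumptions rather than the documented CMP procedure.

```python
import math

def winsorised_overdispersion(rates, sizes, p0, trim=0.10):
    """Multiplicative over-dispersion factor from Winsorised z-scores (hypothetical helper).

    rates : observed proportion for each unit
    sizes : number of admissions for each unit
    p0    : overall (comparator) proportion
    trim  : fraction of z-scores Winsorised at each tail (assumed per tail)
    """
    z = [(r - p0) / math.sqrt(p0 * (1 - p0) / n) for r, n in zip(rates, sizes)]
    ordered = sorted(z)
    k = int(trim * len(ordered))           # scores pulled in at each tail
    low, high = ordered[k], ordered[-1 - k]
    z_w = [min(max(v, low), high) for v in z]
    phi = sum(v * v for v in z_w) / len(z_w)
    # Assumption: no adjustment is made when there is no excess variation.
    return max(1.0, phi)

# Usage: ten units of 100 admissions each, comparator 20%.
rates = [0.32, 0.08] * 5
print(round(winsorised_overdispersion(rates, [100] * 10, p0=0.20), 2))
```

The factor multiplies the binomial variance, so each control limit moves away from the comparator by the square root of the factor. For example, a factor of 3.0 widens the limits by about 1.73 times.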

References

1. Harrell FE, Califf RM, Pryor DB, et al. Evaluating the yield of medical tests. JAMA 1982; 247:2543-6.

2. Hanley JA, McNeil BJ. The meaning and use of the area under the receiver operating characteristics (ROC) curve. Radiology 1982; 143:29-36.

3. Kramer AA, Zimmerman JE. Assessing the calibration of mortality benchmarks in critical care: the Hosmer-Lemeshow test revisited. Crit Care Med 2007; 35:2052-6.

4. Brier GW. Verification of forecasts expressed in terms of probability. Monthly Weather Review 1950; 78:1-3.

5. Shapiro AR. The evaluation of clinical predictions. N Engl J Med 1977; 296:1509-14.

6. Harrison DA, Parry GJ, Carpenter JR, Short A, Rowan K. A new risk prediction model for critical care: the Intensive Care National Audit & Research Centre (ICNARC) model. Crit Care Med 2007; 35:1091-8.

7. Harrison DA, Rowan KM. Outcome prediction in critical care: the ICNARC model. Curr Opin Crit Care 2008; 14:506-12.

8. Spiegelhalter D. Funnel plots for comparing institutional performance. Stat Med 2005; 24:1185-202.

9. Spiegelhalter D. Handling over-dispersion of performance indicators. Qual Saf Health Care 2005; 14:347-51.